Microsoft 70-463 Exam Questions

- Microsoft MCSA: SQL Server 2012/2014 Certifications

- Microsoft Certified Solutions Expert MCSE Certifications

- Topic 1: Determine the need and method for identity mapping and deduplicating/ design a data warehouse that supports many-to-many relationships

- Topic 2: Specify a data source and destination; use data flows; different categories of transformations/ Design and implement a data warehouse

- Topic 3: Define a connection string; parameterization of connection strings/ Plan the configuration of connection managers

- Topic 4: Determine the need for auditing or lineage; determine keys/ Implement package logic by using SSIS variables and parameters

- Topic 5: Define data sources and destinations; distinguish blocking and non-blocking transformations/ Determine the granularity of relationship with fact tables

- Topic 6: Determine the need for supporting Slowly Changing Dimensions (SCD)/ Implement dimensions; implement data lineage of a dimension table

- Topic 7: Determine the loading method for the fact tables/ Determine how much information you need to log from a package

- Topic 8: Implement package execution; plan and design package execution strategy/ Using columnstore indexes; partitioning; additive measures

- Topic 9: Performance tune an SSIS dataflow; optimize Integration Services packages for speed of execution/ Determine if it is appropriate to use a script task

- Topic 10: Determine whether to use SQL Joins or SSIS lookup or merge join transformations/ determine if you need support for slowly changing dimensions

Free Microsoft 70-463 Exam Actual Questions

Note: Premium Questions for 70-463 were last updated on 01-01-1970 (see below)

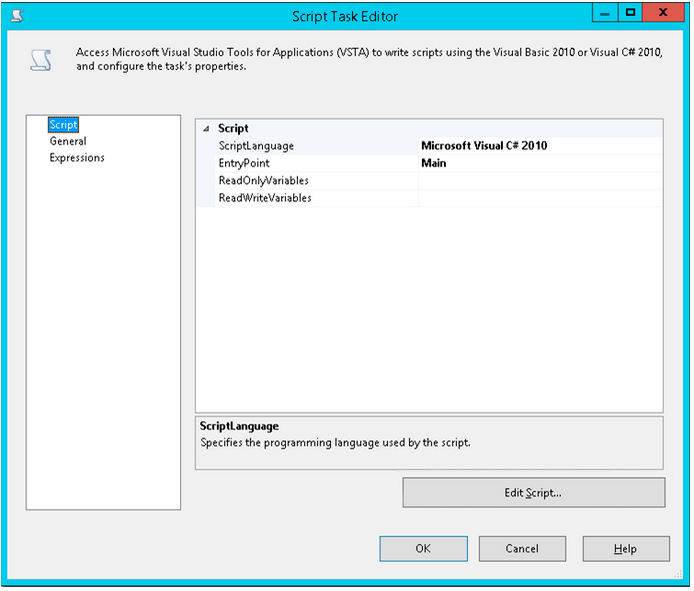

You have a SQL Server Integration Services (SSIS) package. The package contains a script task that has the following comment.

// Update DataLoadBeginDate variable to the beginning of yesterday

The script has the following code.

Dts.Variables["User::DataLoadBeginDate"].Value = DateTime.Today.AddDays(-1);

The script task is configured as shown in the exhibit. (Click the Exhibit button.)

When you attempt to execute the package, the package fails and returns the following error message: "Error: Exception has been thrown by the target of an invocation."

You need to execute the package successfully.

What should you do?

You add existing variables to the ReadOnlyVariables and ReadWriteVariables lists in the Script Task Editor to make them available to the custom script. Within the script, you access variables of both types through the Variables property of the Dts object.
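As a minimal sketch of what this looks like inside the script, the fragment below assumes that `User::DataLoadBeginDate` is a DateTime package variable and that it has been added to the ReadWriteVariables list in the Script Task Editor. The class shape is modeled on the code that the Script Task generates; it only runs inside the SSIS script host, not as a standalone program.

```csharp
using System;
using Microsoft.SqlServer.Dts.Runtime;

// Sketch of a Script Task entry point (simplified from the generated template).
// Assumption: User::DataLoadBeginDate is listed under ReadWriteVariables;
// writing to a variable that is not listed there fails at run time.
public partial class ScriptMain
    : Microsoft.SqlServer.Dts.Tasks.ScriptTask.VSTARTScriptObjectModelBase
{
    public void Main()
    {
        // Update DataLoadBeginDate variable to the beginning of yesterday.
        // DateTime.Today is midnight today, so AddDays(-1) is midnight yesterday.
        Dts.Variables["User::DataLoadBeginDate"].Value = DateTime.Today.AddDays(-1);

        // Report success back to the package.
        Dts.TaskResult = (int)DTSExecResult.Success;
    }
}
```

A variable that the script only reads can go in ReadOnlyVariables instead; it is accessed through the same `Dts.Variables` collection.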

References:

https://docs.microsoft.com/en-us/sql/integration-services/extending-packages-scripting/task/using-variables-in-the-script-task?view=sql-server-2017

You are developing a SQL Server Integration Services (SSIS) package.

To process complex scientific data originating from a SQL Azure database, a custom task component is added to the project.

You need to ensure that the custom component is deployed on a test environment correctly.

What should you do?

You are developing a SQL Server Integration Services (SSIS) project that copies a large number of rows from a SQL Azure database. The project uses the Package Deployment Model. This project is deployed to SQL Server on a test server.

You need to ensure that the project is deployed to the SSIS catalog on the production server.

What should you do?

To facilitate the troubleshooting of SQL Server Integration Services (SSIS) packages, a logging methodology is put in place.

The methodology has the following requirements:

* The deployment process must be simplified.

* All the logs must be centralized in SQL Server.

* Log data must be available via reports or T-SQL.

* Log archival must be automated.

You need to configure a logging methodology that meets the requirements while minimizing the amount of deployment and development effort.

What should you do?

You are designing an enterprise star schema that will consolidate data from three independent data marts. One of the data marts is hosted on SQL Azure.

Most of the dimensions have the same structure and content. However, the geography dimension is slightly different in each data mart.

You need to design a consolidated dimensional structure that will be easy to maintain while ensuring that all dimensional data from the three original solutions is represented.

What should you do?
