Free Linux Foundation CKS Exam Dumps

This page collects free practice questions for the Linux Foundation Certified Kubernetes Security Specialist (CKS) exam. It also links to recently updated premium files for practicing for the actual exam. The premium versions are provided as CKS practice tests, both as desktop software and as a browser-based application, so you can use whichever suits your style. Feel free to try the premium files for free. Good luck with your CKS exam.

Question 1

Cluster: scanner

Master node: controlplane

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context scanner

Given:

You may use Trivy's documentation.

Task:

Use the Trivy open-source container scanner to detect images with severe vulnerabilities used by Pods in the namespace nato.

Look for images with High or Critical severity vulnerabilities and delete the Pods that use those images.

Trivy is pre-installed on the cluster's master node only. Run Trivy from the master node.

Question 2

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context dev

A default-deny NetworkPolicy avoids accidentally exposing a Pod in a namespace that doesn't have any other NetworkPolicy defined.

Task: Create a new default-deny NetworkPolicy named deny-network in the namespace test for all traffic of type Ingress + Egress.

The new NetworkPolicy must deny all Ingress + Egress traffic in the namespace test.

Apply the newly created default-deny NetworkPolicy to all Pods running in the namespace test.

You can find a skeleton manifest file at /home/cert_masters/network-policy.yaml
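
A minimal sketch of a manifest that would satisfy this task, assuming the policy name deny-network and the namespace test as read from the task text. An empty podSelector selects every Pod in the namespace, and listing both policy types without any ingress or egress rules denies all traffic in both directions.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-network
  namespace: test
spec:
  # Empty selector: the policy applies to all Pods in the namespace.
  podSelector: {}
  # Both directions are restricted; no allow rules are listed,
  # so all Ingress and Egress traffic is denied.
  policyTypes:
    - Ingress
    - Egress
```

Note that a NetworkPolicy only takes effect when the cluster's network plugin enforces NetworkPolicies.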

Question 3

Context:

Cluster: gvisor

Master node: master1

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context gvisor

Context: This cluster has been prepared to support the runsc runtime handler as well as the traditional one.

Task:

Create a RuntimeClass named not-trusted using the prepared runtime handler named runsc.

Update all Pods in the namespace server to run on newruntime.
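
A minimal sketch of the two pieces involved, assuming that "newruntime" refers to the newly created RuntimeClass. The first document defines the RuntimeClass; the second is only a fragment showing the field that each Pod spec (or the Pod template of its controller) in the namespace server would need.

```yaml
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: not-trusted
# Must match the handler name configured in the container runtime.
handler: runsc
---
# Fragment of a Pod spec (or of spec.template.spec in a Deployment).
spec:
  runtimeClassName: not-trusted
```

The runtimeClassName of a running Pod cannot be changed in place, so existing Pods have to be recreated, either directly or by editing the Pod template of the controller that owns them.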

Question 4

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context prod-account

Context:

A Role bound to a Pod's ServiceAccount grants overly permissive permissions. Complete the following tasks to reduce the set of permissions.

Task:

Given an existing Pod named web-pod running in the namespace database.

1. Edit the existing Role bound to the Pod's ServiceAccount test-sa to only allow performing get operations, only on resources of type Pods.

2. Create a new Role named test-role-2 in the namespace database, which only allows performing update operations, only on resources of type statefulsets.

3. Create a new RoleBinding named test-role-2-bind binding the newly created Role to the Pod's ServiceAccount.

Note: Don't delete the existing RoleBinding.
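
The three steps above can be sketched as the following manifests. This is an illustration under assumptions: the ServiceAccount name is read as test-sa, and the name of the existing Role is not given in the task, so step 1 is shown only as the rules section that Role would be reduced to.

```yaml
# Step 1: rules section of the existing Role (its name is not stated in the task).
rules:
  - apiGroups: [""]          # core API group, where Pods live
    resources: ["pods"]
    verbs: ["get"]
---
# Step 2: new Role allowing only update on StatefulSets.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: test-role-2
  namespace: database
rules:
  - apiGroups: ["apps"]      # StatefulSets belong to the apps API group
    resources: ["statefulsets"]
    verbs: ["update"]
---
# Step 3: bind the new Role to the Pod's ServiceAccount.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: test-role-2-bind
  namespace: database
subjects:
  - kind: ServiceAccount
    name: test-sa            # assumed name, as read from the task text
    namespace: database
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: test-role-2
```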

Question 5

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context dev

Context:

A CIS Benchmark tool was run against the kubeadm-created cluster and found multiple issues that must be addressed.

Task:

Fix all issues via configuration and restart the affected components to ensure the new settings take effect.

Fix all of the following violations that were found against the API server:

1.2.7 authorization-mode argument is not set to AlwaysAllow FAIL

1.2.8 authorization-mode argument includes Node FAIL

1.2.9 authorization-mode argument includes RBAC FAIL

Fix all of the following violations that were found against the Kubelet:

4.2.1 Ensure that the anonymous-auth argument is set to false FAIL

4.2.2 authorization-mode argument is not set to AlwaysAllow FAIL (Use Webhook authn/authz where possible)

Fix all of the following violations that were found against etcd:

2.2 Ensure that the client-cert-auth argument is set to true
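
A hedged sketch of settings that would address the listed findings. The first two documents are fragments of the static Pod manifests for the API server and etcd (on a kubeadm cluster these normally live under /etc/kubernetes/manifests); the third shows the corresponding fields of the kubelet configuration file. All other flags and fields already present in those files are omitted here and should be left as they are.

```yaml
# Fragment of the kube-apiserver static Pod manifest.
spec:
  containers:
    - name: kube-apiserver
      command:
        - kube-apiserver
        # Not AlwaysAllow, and includes both Node and RBAC.
        - --authorization-mode=Node,RBAC
---
# Fragment of the etcd static Pod manifest.
spec:
  containers:
    - name: etcd
      command:
        - etcd
        - --client-cert-auth=true
---
# Relevant fields of the kubelet configuration file.
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
authentication:
  anonymous:
    enabled: false     # anonymous-auth=false
  webhook:
    enabled: true
authorization:
  mode: Webhook        # instead of AlwaysAllow
```

The kubelet recreates static Pods when their manifest files change, while changes to the kubelet configuration require restarting the kubelet service on the node.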

Question 6

SIMULATION

Enable audit logs in the cluster. To do so, enable the log backend and ensure that:

1. Logs are stored at /var/log/kubernetes/kubernetes-logs.txt.

2. Log files are retained for 5 days.

3. At most 10 old audit log files are retained.
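
The three requirements above map onto kube-apiserver flags roughly as in the following fragment of the API server's static Pod manifest. This is a sketch: the policy file location /etc/kubernetes/audit/policy.yaml and the volume names are hypothetical, and all existing flags, mounts, and volumes are omitted.

```yaml
spec:
  containers:
    - name: kube-apiserver
      command:
        - kube-apiserver
        - --audit-policy-file=/etc/kubernetes/audit/policy.yaml    # hypothetical path
        - --audit-log-path=/var/log/kubernetes/kubernetes-logs.txt
        - --audit-log-maxage=5        # days to retain old log files
        - --audit-log-maxbackup=10    # maximum number of old log files kept
      volumeMounts:
        - name: audit-policy          # hypothetical volume name
          mountPath: /etc/kubernetes/audit/policy.yaml
          readOnly: true
        - name: audit-log             # hypothetical volume name
          mountPath: /var/log/kubernetes
  volumes:
    - name: audit-policy
      hostPath:
        path: /etc/kubernetes/audit/policy.yaml
        type: File
    - name: audit-log
      hostPath:
        path: /var/log/kubernetes
        type: DirectoryOrCreate
```

Because the API server runs as a Pod, the policy file and the log directory must be mounted from the host, otherwise the flags point at paths that do not exist inside the container.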

Edit and extend the basic policy to log:

1. CronJob changes at the RequestResponse level.

2. Log the request body of Deployment changes in the namespace kube-system.

3. Log all other resources in core and extensions at the Request level.

4. Don't log watch requests by the "system:kube-proxy" on endpoints or
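
A minimal sketch of an audit policy covering the rules listed above. Rule order matters because the first matching rule determines the audit level, so the exclusion for system:kube-proxy comes first. The last task item is cut off in the source, so only endpoints is listed in that rule. No verbs filter is set on the other rules, which means every request to those resources is logged; a verbs list could narrow this to modifying requests.

```yaml
apiVersion: audit.k8s.io/v1
kind: Policy
rules:
  # Don't log watch requests by kube-proxy on endpoints.
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
      - group: ""                 # core API group
        resources: ["endpoints"]
  # CronJobs at RequestResponse (request and response bodies).
  - level: RequestResponse
    resources:
      - group: "batch"
        resources: ["cronjobs"]
  # Deployments in kube-system at Request (request body only).
  - level: Request
    namespaces: ["kube-system"]
    resources:
      - group: "apps"
        resources: ["deployments"]
  # Everything else in the core and extensions groups at Request.
  - level: Request
    resources:
      - group: ""
      - group: "extensions"
```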

Question 7

SIMULATION

Before making any changes, build the Dockerfile with the tag base:v1.

Now analyze and edit the given Dockerfile (based on ubuntu:16.04).

Fix two instructions in the file, considering both security and image size.

Dockerfile:

FROM ubuntu:latest

RUN apt-get update -y

RUN apt install nginx -y

COPY entrypoint.sh /

RUN useradd ubuntu

ENTRYPOINT ["/entrypoint.sh"]

USER ubuntu

entrypoint.sh

#!/bin/bash

echo "Hello from CKS"

After fixing the Dockerfile, build the Docker image with the tag base:v2.

To verify: Check the size of the image before and after the build.

Question 8

SIMULATION

Create a RuntimeClass named untrusted using the prepared runtime handler named runsc.

Create a Pod with the image alpine:3.13.2 in the namespace default that runs on the gVisor RuntimeClass.
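
A minimal sketch of both objects. The Pod name gvisor-demo and the sleep command are assumptions added for illustration; the command simply keeps the container running so that it can be exec'd into.

```yaml
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: untrusted
handler: runsc
---
apiVersion: v1
kind: Pod
metadata:
  name: gvisor-demo            # hypothetical name
  namespace: default
spec:
  runtimeClassName: untrusted  # run this Pod with the runsc handler
  containers:
    - name: alpine
      image: alpine:3.13.2
      # Keep the container alive; the image's default shell would exit immediately.
      command: ["sleep", "3600"]
```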

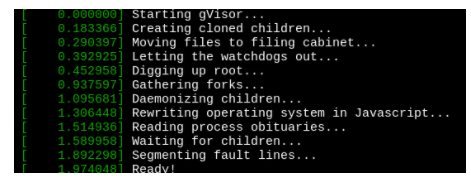

Verify: Exec the pods and run the dmesg, you will see output like this:-

Question 9

SIMULATION

Create a NetworkPolicy named allow-np that allows Pods in the namespace staging to connect to port 80 of other Pods in the same namespace.

Ensure that the NetworkPolicy:

1. Does not allow access to Pods not listening on port 80.

2. Does not allow access from Pods outside the namespace staging.
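
One way to express these requirements, as a sketch: the policy selects every Pod in staging and admits ingress only from Pods in the same namespace, and only on port 80. TCP is assumed as the protocol, since the task does not name one.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-np
  namespace: staging
spec:
  # Applies to all Pods in the namespace staging.
  podSelector: {}
  policyTypes:
    - Ingress
  ingress:
    - from:
        # A bare podSelector matches Pods in the policy's own namespace only.
        - podSelector: {}
      ports:
        - protocol: TCP      # assumed protocol
          port: 80
```

Because the from clause contains only a podSelector and no namespaceSelector, traffic from Pods in other namespaces is not admitted.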

Question 10

SIMULATION

A container image scanner is set up on the cluster.

Given an incomplete configuration in the directory /etc/Kubernetes/confcontrol and a functional container image scanner with the HTTPS endpoint https://acme.local.8081/image_policy:

1. Enable the admission plugin.

2. Validate the control configuration and change it to implicit deny.

Finally, test the configuration by deploying a Pod whose image uses the latest tag.
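
A hedged sketch of what the completed configuration might look like, assuming the admission plugin in question is ImagePolicyWebhook. The file names admission_configuration.yaml and kubeconfig.yaml are hypothetical stand-ins for whatever files the task directory actually contains; the TTL and backoff values are the ones used in the Kubernetes documentation example. Setting defaultAllow to false is what makes the webhook fail closed (implicit deny) when the scanner cannot be reached.

```yaml
# Admission control configuration file (hypothetical name: admission_configuration.yaml).
apiVersion: apiserver.config.k8s.io/v1
kind: AdmissionConfiguration
plugins:
  - name: ImagePolicyWebhook
    configuration:
      imagePolicy:
        # Kubeconfig that points at the scanner's HTTPS endpoint (hypothetical file name).
        kubeConfigFile: /etc/Kubernetes/confcontrol/kubeconfig.yaml
        allowTTL: 50
        denyTTL: 50
        retryBackoff: 500
        defaultAllow: false    # implicit deny when the backend is unavailable
---
# Fragment of the kube-apiserver static Pod manifest; existing flags are omitted.
spec:
  containers:
    - name: kube-apiserver
      command:
        - kube-apiserver
        - --enable-admission-plugins=NodeRestriction,ImagePolicyWebhook
        - --admission-control-config-file=/etc/Kubernetes/confcontrol/admission_configuration.yaml
```

The configuration directory also has to be mounted into the API server Pod for these paths to resolve inside the container.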
